VIP-Based Database Availability Design

This blog article describes in some detail how virtual IP addresses (VIPs) work, specifically the behavior of the Address Resolution Protocol (ARP), a core part of the TCP/IP stack that maps IP addresses to hardware addresses and permits VIPs to move. We will then dig into how split-brain arises as a consequence of ARP behavior. Finally, we will look at ways to avoid split-brain or at least make it less dangerous when it occurs.

Note: the examples below use MySQL, in part because mysqld has a convenient way to show the server host name. However you can set up the same test scenarios with PostgreSQL or most other DBMS implementations for that matter.

What is a Virtual IP Address?

Network interface cards (NICs) typically bind to a single IP address in TCP/IP networks. However, you can also tell the NIC to listen on extra addresses using the Linux ifconfig command. Such addresses are called virtual IP addresses or VIPs for short.

Let's say, for example, you have an existing interface eth0 listening on IP address 192.168.128.114. Here's the configuration of that interface as shown by the ifconfig command:

saturn# ifconfig eth0

eth0 Link encap:Ethernet HWaddr 08:00:27:ce:5f:8e

inet addr:192.168.128.114 Bcast:192.168.128.255 Mask:255.255.255.0

inet6 addr: fe80::a00:27ff:fece:5f8e/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:10057681 errors:0 dropped:0 overruns:0 frame:0

TX packets:8902384 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

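RX bytes:6941125495 (6.9 GB) TX bytes:6305062533 (6.3 GB)

To add a virtual IP address, bring up an alias interface with ifconfig:

saturn# ifconfig eth0:0 192.168.128.130 up

Clients can now connect to MySQL through either address:

mysql -utungsten -psecret -h192.168.128.114 (or)

mysql -utungsten -psecret -h192.168.128.130

To move the VIP to another host, drop it on the current owner and bring it up on the new one:

saturn# ifconfig eth0:0 192.168.128.130 down

...

neptune# ifconfig eth0:0 192.168.128.130 up

How Virtual IP Addresses Work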

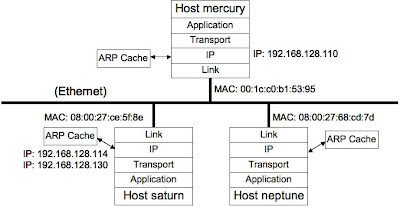

To understand the weaknesses of virtual IP addresses for database high availability, it helps to understand exactly how the TCP/IP stack actually routes messages between IP addresses, including those that correspond to VIPs. The following diagram shows moving parts of the TCP/IP stack in a typical active/passive database set-up with a single client host. In this diagram host saturn currently has virtual IP address 192.168.128.130. Neptune is the other database host. Mercury is the client host.

Applications direct TCP/IP packets using IP addresses, which in IPv4 are four-byte numbers. The IP destination address is written into the header by the IP layer of the sending host and read by the IP layer on the receiving host.

However, this is not enough to get packets from one host to another. At the hardware level within a single LAN, network interfaces use MAC addresses to route messages over physical connections like Ethernet. MAC addresses are 48-bit addresses that are typically burned into the NIC firmware or set in a configuration file if you are running a virtual machine. To forward a packet from host mercury to saturn, the link layer writes in the proper MAC address, in this case 08:00:27:ce:5f:8e. The link layer on host saturn accepts this packet, since it corresponds to its MAC address. It forwards the packet up into the IP layer for acceptance and further processing.

Yet how does host mercury figure out which MAC address to use for particular IP addresses? This is the job of the ARP cache, which maintains an up-to-date mapping between IP and MAC addresses. You can view the ARP cache contents using the arp command, as shown in the following example.

mercury# arp -an

? (192.168.128.41) at 00:25:00:44:f3:ce [ether] on eth0

? (192.168.128.1) at 00:0f:cc:74:64:5c [ether] on eth0

Note that the cache has no entry for the VIP yet. Now connect to MySQL through the VIP:

# mysql -utungsten -psecret -h192.168.128.130

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 33826

...

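mysql>

Watching ARP traffic with tcpdump on mercury while the connection is made shows the request for the VIP and the reply from saturn:

mercury# tcpdump -n -i eth0 arp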

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth0, link-type EN10MB (Ethernet), capture size 96 bytes

09:37:43.755081 ARP, Request who-has 192.168.128.130 tell 192.168.128.110, length 28

09:37:43.755360 ARP, Reply 192.168.128.130 is-at 08:00:27:ce:5f:8e, length 46

The ARP cache on mercury now contains a mapping for the VIP:

# arp -an

? (192.168.128.130) at 08:00:27:ce:5f:8e [ether] on eth0

? (192.168.128.41) at 00:25:00:44:f3:ce [ether] on eth0

? (192.168.128.1) at 00:0f:cc:74:64:5c [ether] on eth0

Virtual IP Addresses and Split-Brain

Most real problems with VIPs appear when you try to move them. The reason is simple: TCP/IP does not stop you from having multiple hosts listening on the same virtual IP address. For instance, let's say you issue the following command on host neptune without first dropping the virtual IP address on saturn.

neptune# ifconfig eth0:0 192.168.128.130 up

Both hosts now hold the VIP. Clear the VIP's ARP cache entry on mercury and connect again:

mercury# arp -d 192.168.128.130

root@logos1:~# mysql -utungsten -psecret -h192.168.128.130

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 294

...

mysql>

This time tcpdump shows two replies to the ARP request:

# tcpdump -n -i eth0 arp

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth0, link-type EN10MB (Ethernet), capture size 96 bytes

09:59:32.643518 ARP, Request who-has 192.168.128.130 tell 192.168.128.110, length 28

09:59:32.643768 ARP, Reply 192.168.128.130 is-at 08:00:27:68:cd:7d, length 46

09:59:32.643793 ARP, Reply 192.168.128.130 is-at 08:00:27:ce:5f:8e, length 46

Both neptune (08:00:27:68:cd:7d) and saturn (08:00:27:ce:5f:8e) answer the request, so which host mercury connects to is effectively random. You can demonstrate the randomness for yourself with a simple experiment. Let's create a test script named mysql-arp-flush.sh, which clears the ARP cache entry for the VIP and then connects to MySQL, all in a loop.

#!/bin/bash
# Clear the ARP entry for the VIP, then connect and print the server host name.
for i in {1..5};
do
  arp -d 192.168.128.130
  sleep 1
  mysql -utungsten -psecret -h192.168.128.130 -N \
    -e "show variables like 'host%'"
done

If you run the script you'll see results like the following. Note the random switch to neptune on the fourth connection.

# ./mysql-arp-flush.sh

+----------+---------+

| hostname | saturn |

+----------+---------+

+----------+---------+

| hostname | saturn |

+----------+---------+

+----------+---------+

| hostname | saturn |

+----------+---------+

+----------+---------+

| hostname | neptune |

+----------+---------+

+----------+---------+

| hostname | saturn |

+----------+---------+

At this point you have successfully created a split-brain. If you use database replication and both databases are open for writes, as would be the default case with MySQL replication, Tungsten, or any of the PostgreSQL replication solutions like Londiste, your applications will randomly connect to each DBMS server. Your data will quickly become irreparably mixed up. All you can do is hope that the problem will be discovered quickly.
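One way to catch this situation early is to look for more than one MAC address answering ARP requests for the VIP. The following is a minimal sketch that assumes tcpdump output in the format shown above; the function name vip_owner_macs is hypothetical and is not part of any tool mentioned in this article.

```shell
#!/bin/bash
# List the distinct MAC addresses that claim a given IP address.
# Reads tcpdump ARP output on stdin and matches lines such as:
#   09:59:32.643768 ARP, Reply 192.168.128.130 is-at 08:00:27:68:cd:7d, length 46
# vip_owner_macs is a hypothetical helper name used for illustration.
vip_owner_macs() {
  local vip="$1"
  awk -v vip="$vip" '
    $3 == "Reply" && $4 == vip && $5 == "is-at" {
      mac = $6
      sub(/,$/, "", mac)   # strip the trailing comma after the MAC
      print mac
    }' | sort -u
}
```

For example, something like "tcpdump -l -n -i eth0 -c 20 arp | vip_owner_macs 192.168.128.130" should print one line per host that answered for the VIP. More than one line means more than one host currently claims the address.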

A Half-Hearted Solution using Gratuitous ARP

You might think that it would be handy if the ARP protocol provided a way to get around split-brain problems by invalidating client host ARP caches. In fact, there is such a feature in ARP--it's called gratuitous ARP. While useful, it is not a solution for split-brain issues. Let's look closely to see why.

Gratuitous ARP works by sending an unsolicited ARP response to let hosts on the LAN know that an IP address mapping has changed. On Linux systems you can issue the arping command as shown below to generate a gratuitous ARP response.

neptune# arping -q -c 3 -A -I eth0 192.168.128.130

Watching with tcpdump on mercury shows the unsolicited replies arriving:

# tcpdump -n -i eth0 arp

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth0, link-type EN10MB (Ethernet), capture size 96 bytes

11:02:27.154279 ARP, Reply 192.168.128.130 is-at 08:00:27:68:cd:7d, length 46

11:02:28.159291 ARP, Reply 192.168.128.130 is-at 08:00:27:68:cd:7d, length 46

11:02:29.162403 ARP, Reply 192.168.128.130 is-at 08:00:27:68:cd:7d, length 46

The ARP cache on mercury now maps the VIP to neptune:

mercury# arp -an

? (192.168.128.130) at 08:00:27:68:cd:7d [ether] on eth0

? (192.168.128.41) at 00:25:00:44:f3:ce [ether] on eth0

? (192.168.128.1) at 00:0f:cc:74:64:5c [ether] on eth0
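If you want to check from a script which host a client currently maps the VIP to, you can pull the MAC address out of the arp -an output. This is a small sketch that assumes the Linux output format shown above; mac_for_ip is a hypothetical helper name.

```shell
#!/bin/bash
# Print the MAC address that the ARP cache holds for an IP address.
# Reads "arp -an" output on stdin, with lines such as:
#   ? (192.168.128.130) at 08:00:27:68:cd:7d [ether] on eth0
# Prints nothing if the cache has no entry for the address.
# mac_for_ip is a hypothetical helper name used for illustration.
mac_for_ip() {
  awk -v ip="($1)" '$2 == ip { print $4; exit }'
}
```

Running "arp -an | mac_for_ip 192.168.128.130" on mercury at this point should print neptune's MAC address, 08:00:27:68:cd:7d.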

To see the effect of gratuitous ARP on clients, create a second test script named mysql-no-arp-flush.sh, which connects to MySQL in a loop without clearing the ARP cache.

#!/bin/bash
# Connect once per second and print the server host name.
for i in {1..30};
do
  sleep 1
  mysql -utungsten -psecret -h192.168.128.130 -N \
    -e "show variables like 'host%'"
done

While the test script is running, we run an arping command from saturn.

saturn# arping -q -c 3 -A -I eth0 192.168.128.130

What we see in the MySQL output is the following. Once the gratuitous ARP is received, mercury switches its connection from neptune to saturn and stays there, at least for the time being.

mercury# ./mysql-no-arp-flush.sh

+----------+---------+

| hostname | neptune |

+----------+---------+

+----------+---------+

| hostname | neptune |

+----------+---------+

+----------+---------+

| hostname | saturn |

+----------+---------+

+----------+---------+

| hostname | saturn |

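+----------+---------+

Gratuitous ARP also affects connections that are already open. To see this, connect to MySQL through the VIP and check the host name:

root@logos1:~# mysql -utungsten -psecret -h192.168.128.130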

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 33853

mysql> show variables like 'hostname';

+---------------+---------+

| Variable_name | Value |

+---------------+---------+

| hostname | neptune |

+---------------+---------+

1 row in set (0.00 sec)

Next, run arping from saturn:

saturn# arping -q -c 3 -A -I eth0 192.168.128.130

Finally, go back and check the host name again in the MySQL session. The session switches over to the other server, which you see at the client level as a lost connection followed by a reconnect.

mysql> show variables like 'hostname';

ERROR 2006 (HY000): MySQL server has gone away

No connection. Trying to reconnect...

Connection id: 33854

Current database: *** NONE ***

+---------------+--------+

| Variable_name | Value |

+---------------+--------+

| hostname | saturn |

+---------------+--------+

1 row in set (0.00 sec)

Useful as it is, gratuitous ARP does not solve the split-brain problem, for three reasons. First, not all TCP/IP stacks even recognize gratuitous ARP responses. Second, gratuitous ARP only takes effect on hosts that actually have a current mapping in their ARP cache. Other hosts will wait until they actually need the mapping and then issue a new ARP request. Finally, ARP mappings automatically time out after a few minutes. In that case the host will issue a new ARP request, which as in the two preceding cases brings us right back to the split-brain scenario we were trying to cure.

Avoiding Virtual IP Split-Brains

Avoiding a VIP-induced split-brain is not a simple problem. The best approach is a combination of sound cluster management, amelioration, and paranoia.

Proper cluster management is the first line of defense. VIPs are an example of a unique resource in the system that only one host may hold at a time. An old saying that has been attributed to everyone from Genghis Khan to Larry Ellison sums up the problem succinctly:

It is not enough to succeed. All others must fail.

The standard technique to implement this policy is called STONITH, which stands for "Shoot the other node in the head." Basically it means that before one node acquires the virtual IP address the cluster manager must make every effort to ensure no other node has it, using violent means if necessary. Moving the VIP thus has the following steps.

- The cluster manager executes a procedure to drop the VIP on all other hosts (for example using ssh or by cutting off power). Once these procedures are complete, the cluster manager executes a command to assign the VIP to the new owner.

- Isolated nodes automatically release their VIP. "Isolated" is usually defined as being cut off from the cluster manager and unable to access certain agreed-upon network resources such as routers or public DNS servers.

- In cases of doubt, everybody stops. For most systems it is far better to be unavailable than to mix up data randomly.
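The ordering in the first step can be sketched in a few lines of shell. This is an illustration only, not a substitute for a real cluster manager: the function names move_vip and run, the DRY_RUN switch, and the use of ssh with the eth0:0 alias are all assumptions made for the example. A real implementation would also fence a node (for example by cutting power) when the drop fails, rather than simply giving up.

```shell
#!/bin/bash
# Sketch of a VIP move: release the VIP everywhere else BEFORE assigning it.
# All names here (run, move_vip, DRY_RUN) are hypothetical.
# With DRY_RUN=1 (the default) commands are printed instead of executed.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "would run: $*"
  else
    "$@"
  fi
}

# Usage: move_vip VIP NEW_OWNER OTHER_HOST...
move_vip() {
  local vip="$1" new_owner="$2" host
  shift 2
  for host in "$@"; do
    # Drop the VIP on every other host first. If any drop fails we are
    # in doubt about who holds the VIP, so stop instead of assigning it.
    run ssh "$host" ifconfig eth0:0 "$vip" down || {
      echo "could not release $vip on $host; refusing to move it" >&2
      return 1
    }
  done
  # Only now is it safe to bring the VIP up on the new owner.
  run ssh "$new_owner" ifconfig eth0:0 "$vip" up
}
```

In dry-run mode, "move_vip 192.168.128.130 neptune saturn" prints the command that drops the VIP on saturn followed by the command that brings it up on neptune, which makes the ordering easy to check before anything is executed.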

Incidentally, you want to be very wary about re-inventing the wheel, especially when it comes to DBMS clustering and high availability. Clustering has a lot of non-obvious corner cases; even the "easy" problems like planned failover are quite hard to implement correctly. You are almost always better off using something that already exists instead of trying to roll your own solution.

Amelioration is the next line of defense, namely to make split-brain situations less dangerous when they actually occur. Failover using shared disks or non-writable slaves (e.g., with DRBD or PostgreSQL streaming replication) has a degree of protection because it is somewhat harder to have multiple databases open for writes. It is still possible, though, and the cluster manager is your best bet to prevent it. When using MySQL with either native or Tungsten replication, by contrast, databases are open for writes and therefore susceptible to data corruption unless you ensure slaves are not writable.

Fortunately, this is very easy to do in MySQL. To make a database read-only for all accounts other than those with the SUPER privilege, just issue the following commands to make the server read-only and confirm that the setting is in effect:

neptune# mysql -uroot -e "set global read_only=1"

neptune# mysql -uroot -e "show variables like 'read_only'"

+---------------+-------+

| Variable_name | Value |

+---------------+-------+

| read_only | ON |

+---------------+-------+

Lastly, paranoia is always a good thing. You should test the daylights out of clusters that depend on VIPs before deployment, and also check regularly afterwards to ensure there are no unexpected writes on slaves. Regular checks of logs are a good idea. Another good way to check for problems in MySQL master/slave setups is to run consistency checks. Tungsten Replicator has built-in consistency checking designed for exactly this purpose. You can also run Maatkit mk-table-checksum at regular intervals. Another best practice is to "flip" masters and slaves on a regular basis to ensure switch and failover procedures work properly. Don't avoid trouble--look for it!
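One cheap regular check is to confirm that each slave is still read-only. The sketch below assumes the output of the "show variables like 'read_only'" query shown earlier, in either the tabular or the tab-separated form; is_read_only is a hypothetical helper name.

```shell
#!/bin/bash
# Succeed only if the server reports read_only = ON.
# Reads on stdin the output of:
#   mysql -uroot -N -e "show variables like 'read_only'"
# Accepts both tab-separated output and the "| read_only | ON |" table form.
# is_read_only is a hypothetical helper name used for illustration.
is_read_only() {
  awk '
    { gsub(/[|]/, " ") }   # drop table borders so fields split cleanly
    $1 == "read_only" { found = 1; ok = ($2 == "ON") }
    END { exit !(found && ok) }'
}
```

A cron job could then run something like "mysql -uroot -N -e "show variables like 'read_only'" | is_read_only || echo "WARNING: neptune is writable"" on each slave. Missing output counts as a failure, so an unreachable server is reported as well.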

Conclusion and Note on Sources

Virtual IP addresses are a convenient way to set up database high availability but can lead to very severe split-brain situations if used incorrectly. To deploy virtual IP addresses without problems you must first of all understand how they work and second use a sound cluster management approach that avoids split-brain and minimizes the impact if it does occur. As with all problems of this kind you need to test the implementation thoroughly before deployment as well as regularly during operations. This will help avoid nasty surprises and corrupt data that are otherwise all but inevitable.

Finally it is worth talking a bit about sources. I wrote this article because I could not find a single location that explained virtual IP addresses in a way that drew out the consequences of their behavior for database failover. That said, there are a couple of good general sources for information on Internet tools and high availability:

- http://linux-ip.net/ -- Guide to IP Layer Network Administration with Linux.

- http://linux-ha.org/ -- Linux HA wiki pages

14 comments:

awesome post! thanks.

Thanks for this article...

Really good explanation.

It seems to me you would want to use a proper virtual address sharing mechanism, such as CARP in FreeBSD, that will ensure exactly one server will respond to the IP at a time.

@Vivek, I didn't mention CARP but I have seen this used and it generally seems good. The basic point is you need *some* kind of cluster manager to manage VIPs. Our own Tungsten product has a fairly sophisticated scheme for VIP management. I have also used Heartbeat, which is quite similar. Heartbeat is integrated into PaceMaker, which is mentioned in the article.

The VRRP part of keepalived is also a common way to handle VIPs on Linux.

Great detail. I've got to re-read it again in detail but it is a good reference for me to describe to clients some of the technical details and issues around VIP's

@Ronald and others! Thanks, glad you liked the article. I hope to dig into cluster manager behavior more fully in a future article.

This is where a true hardware based vip like the one found in Alteon load balancers would help (just an example, I have no stake in Alteon :) ).

The mac address of the VIP doesn't change, no need for ARP monkeying, drawback is that all communication goes through the load balancer that can become a bottleneck in extreme cases.

I wonder if something like that is doable using the linux iptables stack.

@Giorgio. You can go still further and not to use VIPs at all. We have done a lot of work with intelligent SQL proxies. In additional to avoiding ARP weirdness they allow you to do cool things like seamless switch (e.g., flipping a master/slave pair) and load-balancing reads safely onto replicas. I hope to post more extensively in the near future but in the meantime you can look at Tungsten Connector documentation for a sense of the approach (http://www.continuent.com/images/stories/pdfs/tungsten-connector-guide.pdf).

Excellent writeup, Robert. Much appreciated! You covered a lot of the nasty gotchas that I blissfully glossed over in my MySQL HA talk :) Added to the MySQL Librarian!

Thanks for the article. I was looking for this kind of article to understand VIPs, as we are going to use a VIP for our PostgreSQL database failover.

Very good post !

Great post. Thank you very much good sir.

Nice article :) I have a question: do virtual IPs work across different network switches? Would this be a good solution when the two nodes (master and slave) are far apart?
