In a nutshell, the results were quite good. In the best cases Tungsten parallel apply out-performs single-threaded native replication by about 4.5 to 1. Both Giuseppe and I have verified this using slightly different test methodologies, which helps avoid dumb counting mistakes. Our results also match field tests at a customer site over the previous summer, so we regard them as fairly robust. In the remainder of this article I would like to expand a bit on the details of the benchmarks as well as the results. The results shown here are from my tests.

Benchmark Test Design

Both Giuseppe and I used a similar testbed for replication testing:

- HP Proliant server, dual Xeon L5520 CPUs with hyper-threading enabled, 72Gb of RAM

- 1TB HP Smart Array RAID 1+0

- Centos 5.6

- XFS file system

- MySQL 5.1.57 with InnoDB buffer pool set to 10Gb and using O_DIRECT purge method

- Tungsten Replicator 2.0.5 build 347

The Tungsten Slave is configured as described in a previous article in this blog, except that there are 30 channels instead of 10. The exact installation command is given at the end of this article.

The test run uses sysbench to spread transactions evenly across 30 databases of identical size, then measure time to process them. This is also known as a replication catch-up test.

The test run uses sysbench to spread transactions evenly across 30 databases of identical size, then measure time to process them. This is also known as a replication catch-up test.

- Load all MySQL servers with an identical dataset consisting of 30 databases pre-populated with data from sysbench. Giuseppe wrote a new tool called Large Data Generator that is very helpful for capturing and loading such datasets.

- With the slaves shut down, store the master binlog start position and then run 30 sysbench oltp test processes against the master to update and read from all schemas simultaneously for one hour.

- Start the MySQL slave from the stored master binlog position and measure time to process the sysbench transactions. Shut down the MySQL slave at the end of the test.

- Start the Tungsten slave from the stored master binlog position and measure time to process the sysbench transactions using Tungsten Replicator with 30 channels (i.e. threads).

Database performance is substantially different depending on whether data are fully resident in the buffer pool (cache-resident) or largely read from disk (I/O-bound). Tungsten parallel replication over 30 databases varies from 1.8 to 4.5 depending on which case you look at, as shown in the following table. Processing times are in minutes (m).

Test Scenario

|

Rows/Db

|

Data Size

|

MySQL Slave

|

Tungsten Slave

|

Ratio

|

Cache-resident

|

10K

|

430Mb

|

30m

|

17m

|

1.8

|

I/O-Bound

|

10M

|

68Gb

|

228m

|

51m

|

4.5

|

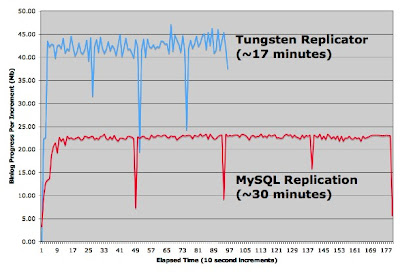

Let's look at the results in detail. In the cache-resident test the base dataset is relatively small and loads fully into the buffer cache within a minute or two. Both MySQL and Tungsten slaves complete in well under an hour. Here is a graph showing throughput as measured in bytes of binlog processed per 10 second increment.

|

| Cache-Resident Slave Catch-Up - MySQL vs. Tungsten Replicator, 30 Databases |

With I/O bound workloads, on the other hand, we see a profound difference in performance. Tungsten Replicator is at least 6x slower than in the cache-resident case, but still processes updates faster than the master (51 minutes on the slave vs. 60 minutes on the master). Buffer cache loading is correspondingly fast and Tungsten reaches steady-state performance within about 20 minutes. MySQL native replication on the other hand is far slower. The slave not only does not catch up, but it would quickly lag far behind the master under this workload. It takes about 90 minutes for native replication even to achieve steady state performance after buffer pool loading.

|

| I/O-Bound Slave Catch-Up - MySQL vs. Tungsten Replicator, 30 Databases |

Further Improvements and Caveats

The current results of parallel replication benchmarks on Tungsten are gratifying especially when you consider that two years ago Tungsten Replicator performance was around 10% of the speed of MySQL replication. Nevertheless, these benchmarks are not the final word. It is clear there is room for optimization as we observe that Tungsten processes the cache-bound binlog at least 6 times faster than the I/O bound workload. Much of the difference seems to be time spent reading from disk. If this could be improved, Tungsten would go even faster.

During the London conference Yoshinori Matsunobu published some excellent performance results using slave pre-fetch, which has encouraged us to build pre-fetch into Tungsten as well. I am curious to see if we can further boost throughput by adding pre-fetching on each parallel thread, though other people at the conference such as Domas Mituzas were not optimistic. Either way, I am certain we will improve performance, if not using pre-fetch then with other tricks like batching inserts.

Finally, some caveats. Our sysbench load is nice because it is evenly distributed across schemas of exactly the same size. Most application workloads do not behave this way, though some do come very close. The slides for my talk discuss practical issues in maximizing performance in real applications. I suspect that a combination of parallelization with pre-fetch will in fact turn out to be a very good solution for a wide variety of workloads.

Fine Print

If you would like to repeat our results (or attack them as fraudulent), here are some parameters that may help. The database settings in the MySQL sandbox instances are as follows:

default-storage-engine=InnoDB

innodb-additional-mem-pool-size=100M

innodb-flush-method=O_DIRECT

innodb-log-buffer-size=4M

innodb-log-file-size=50M

innodb-thread-concurrency=0

innodb_buffer_pool_size=10G

innodb_file_format=barracuda

innodb_file_per_table=1

innodb_flush_log_at_trx_commit=2

innodb_strict_mode=1log-bin=mysql-binmax-connections=500

max_allowed_packet=48M

skip_slave_start

sync_binlog=0

Next, here is the sysbench command used to generate load on each schema. We run 30 of these simultaneously varying the database name for each invocation. This example is for the I/O-bound case.

sysbench --test=oltp --db-driver=mysql --mysql-db=${db} \ --mysql-user=msandbox --mysql-password=msandbox \

--mysql-host=127.0.0.1 --mysql-port=33306 \

--oltp-read-only=off --oltp-table-size=10000000 \

--oltp-index-updates=4 --oltp-non-index-updates=2 \

--max-requests=200000 \

--max-time=3600 --num-threads=5 run

The replicator configuration is given in the slides for the talk, but here it is again. Options in red are required for sandboxes. Production installations are therefore simpler than what is shown here.

tools/tungsten-installer tools/tungsten-installer --direct -a \

--service-name=parallel --native-slave-takeover \

--master-host=127.0.0.1 --master-port=33306 \

--master-user=msandbox --master-password=msandbox \

--slave-host=127.0.0.1 --slave-port=33307 \

--slave-user=msandbox --slave-password=msandbox \

--home-directory=/opt/continuent \

--property=replicator.store.parallel-queue.maxOfflineInterval=5 \

--svc-parallelization-type=disk --buffer-size=100 \

--channels=30 --thl-port=2115 --rmi-port=10010 \

--skip-validation-check=MySQLPermissionsCheck \

--skip-validation-check=MySQLApplierServerIDCheck \

--start-and-report

To equal the results shown above you will also need to assign databases explicitly to channels in the shard.list file. Otherwise, databases will be assigned channels using a hashing function, which tends to result in somewhat uneven distributions. Look in the comments of the shard.list file for instructions on how to do this.

Finally, all of our tests depend on two excellent tools from Giuseppe Maxia: MySQL Sandbox and the new Large Data Generator program in the Tungsten Toolbox. Once you get the hang of them you will become completely addicted as they make test setup both reliable as well as quick.

12 comments:

It's really great to see Tungsten getting air time. Thanks for the effort and analysis!

Thanks for sharing the results, would definitely be interesting to see round two of mysql native replication vs tungsten replicator but with SSD in place :)

Thank you for including the time-series graphs instead of just a single number. The spikes and notches are interesting. Have you tracked down the source of that? Is it due to MySQL, or Tungsten?

First of all you are all welcome!

@Baron, if you mean the notches that are particularly prominent in the first graph I had assumed they correspond to checkpoints in InnoDB. However, as I recall from one of your Xaprb articles InnoDB does fuzzy checkpoints usually so you would not expect these stalls.

It is interesting that both MySQL replication and Tungsten get the same behavior. Moreover if you multiply out the area under the graph it's about one GB, which is also the binlog size. So, perhaps there's something related to binlogs at work here. One possibility I need to check is that my algorithm for counting bytes somehow marks the progress incorrectly when the slave moves to transactions from the next binlog.

Meanwhile the ugly blue line for Tungsten on the second graph is an artifact of the way Tungsten parallel replication marks progress. Overall progress is based on the oldest committed transaction is reported. This number may appear not to budge across several seconds when there are a lot of channels, because inactive channels do not update their position unless they receive a transaction. Tungsten generates fake transactions periodically to force updates on all channels, but it makes the graph very choppy and requires a trend line to sort out.

Hi Robert,

Are your table columns reversed for I/O bound numbers? Looks like it should be MySQL:228 and Tungsten:51 according to the graph.

Cool article. We might get to a point of checking this out at Vimeo some day. It is good to see the numbers.

Austin

@Austin, ouch and thanks! The numbers are indeed reversed. Should be fixed now.

Robert: good point about the binlog being 1GB. I think this is one of the issues in Domas' MySQL at Facebook talk (same talk that you sometimes hear being given by Mark too) that MySQL takes some global lock while rotating the binlogs. I might remember wrong, but it seems like a good guess at least.

@hingo, It also struck me as suspicious. On the other hand the graphs track slave apply position, which is decoupled from the master read position in both MySQL (it's the relay thread) and Tungsten (it's a couple stages up the replication pipeline, where we extract from the master). In both cases slaves can read from the master a lot faster than they can apply SQL, so delays on the master should not be visible. Hence my curiosity about the measurements.

Happily the overall timings are not in doubt. Giuseppe uses a completely different way of counting the elapsed time and gets the same or even better results.

@Robert: But your slaves are writing a binlog too?

@hingo, Good guess but I'm unable to find any evidence this is the case. Slave updates are unlogged. The mystery deepens!

have you compared jdbc driver perf? im pretty sure there are lots of improvements i could do regarding garbage collection in drizzle-jdbc

@Marcus, the #1 thing for performance on the JDBC driver is to get real support for statement batching at the network level in order to reduce the number of round trips. We have a prototype of this already implemented but have not profiled it very extensively yet. Otherwise JDBC batches end up doing a round trip for each statement, which our profiling indicates is a performance hit for cache-resident workloads.

Post a Comment